Introduction

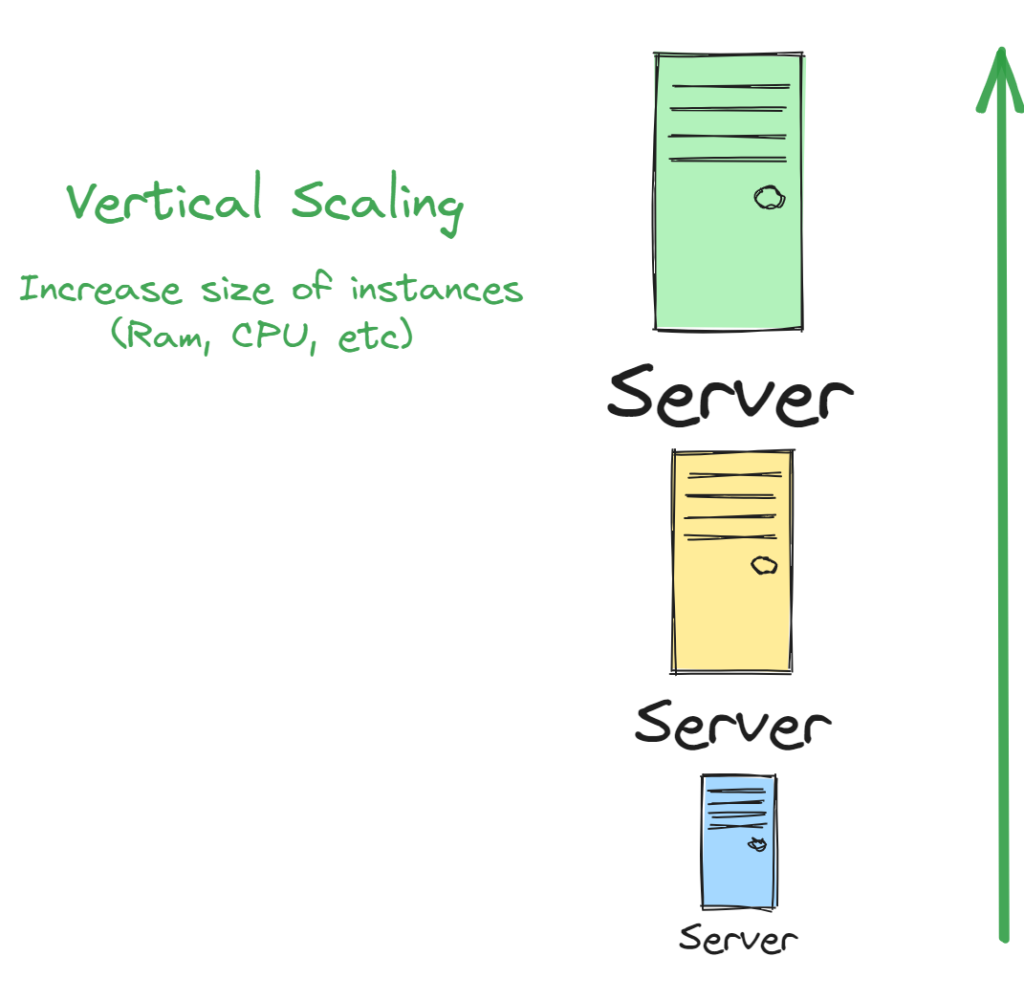

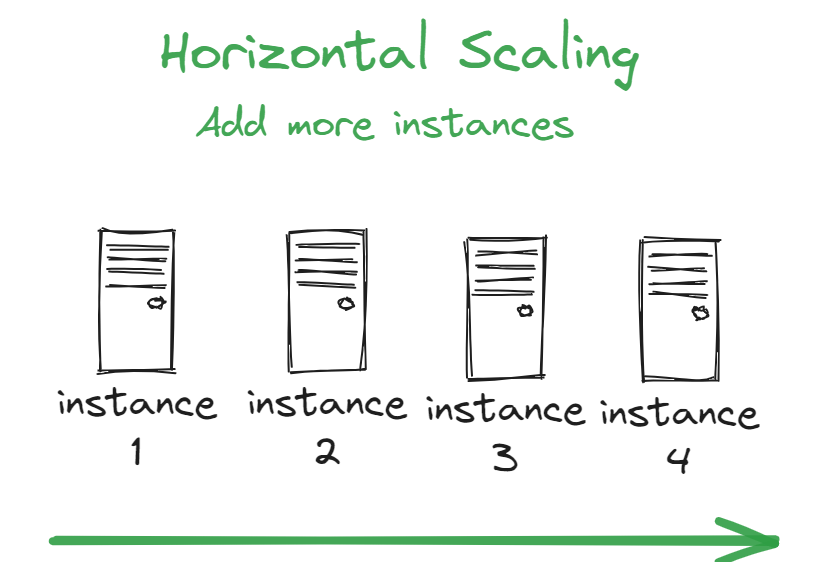

Scaling refers to expanding an application or system to handle increases in traffic, data, or usage. Vertical and horizontal scaling represent two core strategies for growth.

Vertical scaling involves increasing the power of individual servers. Horizontal scaling means distributing load across an increasing number of servers.

This guide compares vertical and horizontal scaling. You’ll learn the pros and cons of each, when each is appropriate, and how to combine them, so you can build applications that are ready for future growth. Let’s get started!

Vertical and Horizontal Scaling:

What is Vertical Scaling?

Vertical scaling means increasing the specifications and resources of a single server instance. Forms of vertical scaling include:

- Adding more CPUs for parallel processing

- Increasing RAM for larger in-memory caches

- Using faster CPUs for higher throughput

- Expanding storage space with larger hard drives

- Upgrading network interface cards for higher bandwidth

For example, you might upgrade a database server from 2 to 4 CPUs and from 8GB of RAM to 16GB. This makes that single node more powerful without adding more nodes.

Benefits of Vertical Scaling

There are a few advantages to scaling vertically by beefing up servers:

- Simplicity – No architectural or code changes are needed. Just upgrade the CPU, RAM, etc., and restart the server.

- Latency – Adding resources to a single server keeps data local and avoids network calls between nodes. This can improve latency.

- Cost savings – At modest scale, upgrading one server can cost less than buying and operating several commodity servers.

So in situations where a quick performance boost or size increase is needed on a budget, vertical scaling may provide the quickest gains.

Drawbacks of Vertical Scaling

However, there are also some downsides to consider with vertical scaling:

- Limited scalability – There are physical limits to how much you can expand a single server. Diminishing returns are also common with more resources.

- No redundancy – A single beefed-up node is a single point of failure. If that server crashes, service is fully disrupted.

- Vendor lock-in – Specialized larger servers often rely on proprietary hardware/software. This can limit portability.

- Migration difficulties – Moving or upgrading an overloaded, monolithic server is challenging.

- Cost – Prices rise steeply at the high end, so each extra unit of capacity costs more than the last.

So while vertical scaling offers a fast first step, it has major capacity, reliability, and cost limitations long term.
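The diminishing returns mentioned above can be illustrated with Amdahl's law, which bounds the speedup from adding CPUs when part of the work cannot run in parallel. The sketch below is only an illustration: the function name and the 90% parallel share are assumptions, not measurements from any real workload.

```python
def amdahl_speedup(parallel_fraction: float, cpus: int) -> float:
    """Theoretical speedup under Amdahl's law.

    parallel_fraction is the share of the work (0.0 to 1.0) that can
    run in parallel; the rest is assumed to be strictly serial.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cpus < 1:
        raise ValueError("cpus must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cpus)


if __name__ == "__main__":
    # Assumed workload: 90% of the work parallelizes.
    for cpus in (2, 4, 8, 16, 64):
        print(f"{cpus:>3} CPUs -> {amdahl_speedup(0.9, cpus):.2f}x")
```

With a 90% parallel share, the speedup can never reach 10x no matter how many CPUs the server has, which is one reason scaling up eventually stops paying off.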

When is Vertical Scaling Appropriate?

Given its tradeoffs, vertical scaling is best for:

- Meeting sudden traffic spikes

- Gaining a quick performance boost

- Handling rapidly increasing datasets

- Optimizing budget-constrained projects

- Supplementing horizontal scaling as needed

It serves well as an initial tactical measure while longer-term horizontal scaling is planned.

What is Horizontal Scaling?

Horizontal scaling means increasing capacity by adding more servers or instances. This includes:

- Adding more app servers

- Adding more database read replicas

- Expanding to more availability zones

- Adding more caches

- Load balancing across more nodes

Rather than one beefy server, horizontal scaling uses many smaller commodity servers.

Benefits of Horizontal Scaling

Horizontal scaling unlocks greater reliability, resiliency, and cost benefits:

- Redundancy – Downtime is avoided if one instance fails since traffic goes to the remaining servers.

- Cost efficiency – Commodity servers are cheaper, allowing incremental growth.

- Performance – Load is distributed across many CPUs, so work can be parallelized further.

- Scalability – Capacity keeps growing as you add nodes.

- Geographic distribution – Nodes can spread closer to users globally.

By utilizing horizontal scale out, applications can achieve web-scale capacity.
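The redundancy benefit above can be put into rough numbers. The sketch below assumes that servers fail independently and that any single surviving server can carry the full load; both are simplifications, and the function name is hypothetical.

```python
def combined_availability(node_availability: float, nodes: int) -> float:
    """Probability that at least one of `nodes` servers is up.

    Assumes independent failures and that one healthy server is enough
    to keep the service running.
    """
    if not 0.0 <= node_availability <= 1.0:
        raise ValueError("node_availability must be between 0 and 1")
    if nodes < 1:
        raise ValueError("nodes must be at least 1")
    return 1.0 - (1.0 - node_availability) ** nodes


if __name__ == "__main__":
    # Assumed figure: each server is available 99% of the time.
    for nodes in (1, 2, 3):
        print(f"{nodes} node(s): {combined_availability(0.99, nodes):.6f}")
```

In practice failures are often correlated (shared power, network, or a bad deploy), so real gains are smaller than this model suggests.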

Drawbacks of Horizontal Scaling

There are also some downsides to horizontal scaling to consider:

- Complexity – Architectures, networking and traffic distribution get more complex.

- Data consistency – Writable data must be kept synchronized across servers.

- Testing – Validating a distributed system is harder than validating a monolith.

- Operational costs – Managing many servers drives more operational overhead.

- Multi-tenancy – On shared infrastructure, noisy neighbors impact performance.

With good engineering, these can be managed. Overall, horizontal scaling unlocks far more growth potential.

When is Horizontal Scaling Appropriate?

Horizontal scale out excels in:

- Supporting exponential traffic increases

- Achieving high reliability and uptime

- Delivering content globally

- Managing big data and compute workloads

- Building loosely coupled architectures

- Taking advantage of cloud elasticity

It’s the go-to for serious scale when vertical scaling caps out.

Hybrid Vertical + Horizontal Scaling

In most real-world systems, a combination of vertical and horizontal scaling is used:

- Start with vertical – Initially make servers more powerful to support growth on a budget with simpler ops.

- Transition to horizontal – Once diminishing returns hit, scale out by adding more instances.

- Supplement with vertical – When bottlenecks hit specific nodes like databases or messaging queues, scale them up.

- Optimize selectively – Choose what resources to scale based on data patterns.

Blending both methods utilizes their complementary strengths.

Horizontal Scaling Techniques

Scaling out brings additional architectural considerations:

Stateless vs Stateful Designs

Stateless applications don’t store client data locally, so any instance can handle any request and load is easy to distribute.

Stateful applications keep writable data on the instance. That data must be synchronized across nodes, which adds complexity.

When possible, leverage stateless designs for simpler scaling.
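As a minimal sketch of the stateless approach, the example below moves session data out of the application instances and into a shared store, so either instance can serve the same client. `SharedSessionStore` and `StatelessAppInstance` are hypothetical names, and the in-memory dictionary stands in for an external cache or database.

```python
class SharedSessionStore:
    """Stand-in for an external store that every app instance can reach.

    Hypothetical and in-memory here; a real deployment would use a
    networked cache or database.
    """

    def __init__(self) -> None:
        self._data = {}

    def load(self, session_id: str) -> dict:
        # Return a copy so callers cannot mutate stored state by accident.
        return dict(self._data.get(session_id, {}))

    def save(self, session_id: str, session: dict) -> None:
        self._data[session_id] = dict(session)


class StatelessAppInstance:
    """Keeps no client data between requests; state lives in the store."""

    def __init__(self, name: str, store: SharedSessionStore) -> None:
        self.name = name
        self.store = store

    def add_to_cart(self, session_id: str, item: str) -> list:
        session = self.store.load(session_id)
        cart = session.get("cart", []) + [item]
        session["cart"] = cart
        self.store.save(session_id, session)
        return cart


store = SharedSessionStore()
app_a = StatelessAppInstance("app-a", store)
app_b = StatelessAppInstance("app-b", store)

# Two requests from the same client land on different instances.
app_a.add_to_cart("session-123", "book")
cart = app_b.add_to_cart("session-123", "pen")
print(cart)  # ['book', 'pen']
```

The trade-off is that the shared store itself now has to be scaled and kept available.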

Load Balancing

Load balancers route traffic across multiple backend application instances. Common balancing strategies:

- Round robin – Distribute requests sequentially

- Least connections – Send to node with fewest open connections

- Hash-based – Ensure same client hits same node

- Performance-based – Send to fastest responding node

Combine strategies to optimize distribution.
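Three of the strategies above can be sketched in a few lines each. These are illustrative toy classes with hypothetical names, not the implementation of any particular load balancer; node names such as `app-1` are placeholders.

```python
import hashlib
import itertools


class RoundRobinBalancer:
    """Hands out nodes in a fixed rotating order."""

    def __init__(self, nodes):
        self._cycle = itertools.cycle(list(nodes))

    def pick(self) -> str:
        return next(self._cycle)


class LeastConnectionsBalancer:
    """Sends each request to the node with the fewest open connections."""

    def __init__(self, nodes):
        self.open_connections = {node: 0 for node in nodes}

    def pick(self) -> str:
        # Ties go to the node that was listed first.
        node = min(self.open_connections, key=self.open_connections.get)
        self.open_connections[node] += 1
        return node

    def release(self, node: str) -> None:
        # Call when a connection to `node` closes.
        self.open_connections[node] -= 1


class HashBalancer:
    """Maps a client identifier to the same node on every request."""

    def __init__(self, nodes):
        self.nodes = list(nodes)

    def pick(self, client_id: str) -> str:
        digest = hashlib.sha256(client_id.encode("utf-8")).digest()
        index = int.from_bytes(digest[:8], "big") % len(self.nodes)
        return self.nodes[index]


nodes = ["app-1", "app-2", "app-3"]

round_robin = RoundRobinBalancer(nodes)
print([round_robin.pick() for _ in range(4)])

least_conn = LeastConnectionsBalancer(nodes)
first = least_conn.pick()
least_conn.release(first)

sticky = HashBalancer(nodes)
print(sticky.pick("client-42") == sticky.pick("client-42"))
```

Note that the simple modulo hash remaps most clients when the number of nodes changes; consistent hashing is a common way to reduce that churn.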

Database Scaling

Scaling databases is harder than scaling stateless application servers. Common techniques include:

- Read/write splitting – Isolate reads and writes

- Replication – Duplicate data across nodes

- Sharding – Partition data across clustered servers

- NoSQL – Adopt distributed database architectures

Plan data models and access patterns accordingly.
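To make read/write splitting and sharding more concrete, here is a rough routing sketch. The names `ReadWriteRouter` and `shard_for` are hypothetical, the server names are placeholders, and detecting reads by checking for a leading `SELECT` is a deliberate oversimplification.

```python
import hashlib
import itertools


def shard_for(key: str, shard_count: int) -> int:
    """Pick a shard index for `key` using a stable hash."""
    if shard_count < 1:
        raise ValueError("shard_count must be at least 1")
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


class ReadWriteRouter:
    """Sends reads to replicas in rotation and everything else to the primary."""

    def __init__(self, primary: str, replicas: list) -> None:
        self.primary = primary
        self._replicas = itertools.cycle(replicas) if replicas else None

    def route(self, sql: str) -> str:
        # Naive check; real routers must also handle transactions,
        # locking reads, and reads that cannot tolerate stale data.
        is_read = sql.lstrip().upper().startswith("SELECT")
        if is_read and self._replicas is not None:
            return next(self._replicas)
        return self.primary


router = ReadWriteRouter("db-primary", ["db-replica-1", "db-replica-2"])
print(router.route("SELECT * FROM users WHERE id = 7"))
print(router.route("UPDATE users SET name = 'Ada' WHERE id = 7"))
print(shard_for("user-7", shard_count=4))
```

Because replicas usually lag slightly behind the primary, a read routed to a replica right after a write may return stale data, which is worth accounting for in the access patterns.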

Cloud Scaling Considerations

Cloud platforms like AWS make scaling easier through:

- Auto Scaling Groups – Automatically add or remove instances based on demand.

- Load Balancers – Managed load balancers seamlessly distribute traffic.

- Serverless – Functions and databases with built-in auto-scaling.

- Global infrastructure – Launch resources closer to users around the world.

- Configuration management – Create server templates for easy replication.

- Pricing model – Pay-as-you-go pricing based on usage and resources.

Leverage cloud capabilities to simplify scaling.
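The idea behind demand-based auto scaling can be sketched as a simple proportional rule: resize the fleet so the observed metric moves back toward a target. This is an assumed, simplified rule for illustration only. Managed services apply their own policies, cooldowns, and health checks, and the function name and default limits below are hypothetical.

```python
import math


def desired_instances(
    current: int,
    observed_cpu: float,
    target_cpu: float,
    min_instances: int = 1,
    max_instances: int = 10,
) -> int:
    """Suggest an instance count that brings average CPU near the target.

    Scales the current count by observed/target, rounds up, and clamps
    the result to the configured minimum and maximum.
    """
    if current < 1:
        raise ValueError("current must be at least 1")
    if target_cpu <= 0:
        raise ValueError("target_cpu must be positive")
    raw = math.ceil(current * observed_cpu / target_cpu)
    return max(min_instances, min(max_instances, raw))


if __name__ == "__main__":
    # Assumed scenario: 4 instances averaging 90% CPU, target of 60%.
    print(desired_instances(4, observed_cpu=90.0, target_cpu=60.0))  # 6
```

Rounding up errs on the side of extra capacity, and the min/max bounds keep a metric spike or a bad reading from scaling the fleet to zero or to an unbounded size.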

On-Premise Scaling Considerations

Scaling traditional on-premise infrastructure poses challenges like:

- Manual server capacity planning and procurement cycles

- Limited load balancer automation

- Geographic expansion requires setting up new data centers

- Hardware purchases tied to large fixed costs

- Service interruptions while servers are updated or expanded

While doable, scaling on-premise requires more performance benchmarking and workload projections.

Monitoring and Instrumentation

Robust monitoring and metrics provide the data necessary to scale proactively:

- Application performance metrics – Requests, latency, errors

- Server metrics – Memory, CPU, disk, network, load

- Database metrics – Connections, cache hit rate, replication lag

- Cluster metrics – Node status, container resource usage

- Business metrics – Revenue, users, growth trajectories

Analyze metric streams to guide scaling decisions.
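As a small illustration of turning metrics into scaling signals, the sketch below checks 95th-percentile latency and average CPU against thresholds. The thresholds, function names, and sample values are assumptions chosen for the example; suitable limits depend on the application.

```python
import math


def percentile(values: list, pct: float) -> float:
    """Nearest-rank percentile of a non-empty list of numbers."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def scaling_signals(
    latencies_ms: list,
    cpu_percent: list,
    latency_limit_ms: float = 500.0,
    cpu_limit: float = 75.0,
) -> list:
    """Return human-readable reasons to consider scaling, if any."""
    signals = []
    if percentile(latencies_ms, 95) > latency_limit_ms:
        signals.append("p95 latency above limit")
    if sum(cpu_percent) / len(cpu_percent) > cpu_limit:
        signals.append("average CPU above limit")
    return signals


if __name__ == "__main__":
    # Assumed samples: mostly fast requests with a slow tail, busy CPUs.
    latencies = [100.0] * 18 + [900.0, 900.0]
    print(scaling_signals(latencies, cpu_percent=[80.0, 85.0]))
```

Percentiles are used for latency because an average can hide a slow tail that users still feel.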

Conclusion

Mastering vertical and horizontal scaling strategies lets you grow applications smoothly from startup to enterprise scale.

Intelligently combine vertical scaling for quick returns and horizontal scale out for long-term capacity. Monitor key metrics and leverage cloud elasticity to scale sustainably.

With the right architecture and infrastructure, your applications can thrive from a few users to millions worldwide. Now get out there, plan ahead, and scale fearlessly!

Frequently Asked Questions

Q: What are key indicators an application needs to be scaled?

A: Performance issues under load, traffic exceeding capacity, slow database queries, unavailability during peaks, and infrastructure costs exceeding revenue all indicate scaling is needed. Monitor for triggers.

Scaling becomes necessary when traffic, data, or usage outpaces existing capacity and leads to bottlenecks. Key triggers include degraded performance, outages, slow databases, and spikes overwhelming servers. Robust monitoring provides data to guide scaling decisions proactively.

Q: When does it make sense to adopt microservices vs scaling monoliths?

A: When a monolith becomes overly complex and unreliable as it grows, breaking it into independently scalable microservices can simplify scaling. But avoid adopting microservices prematurely.

Microservices architecture breaks applications into decentralized single-responsibility services. This enables independent scaling as needed. Adopt microservices when monoliths exhibit unwieldy complexity, unreliable performance, slow release velocity, and difficulty scaling. But don’t split services before the system is stable and clear usage patterns have emerged.

Q: What are disadvantages or tradeoffs of horizontal scaling?

A: Scaling out introduces complexity around correctly distributing stateful data, network latency between nodes, and orchestrating many servers and services. It also drives operational overhead.

While horizontal scaling unlocks greater capacity, it also introduces challenges like data synchronization, distributed networking, and orchestrating many instances. Testing and monitoring complex distributed systems is harder. Operational costs also rise from managing many servers. Weigh the complexity against the benefits.

Q: How can applications be optimized for horizontal scaling?

A: Stateless designs, asynchronous processing, database read replicas, caching, and horizontal partitioning allow harnessing distributed architectures. Refactoring may be needed to support scale-out.

Scaling out requires that applications correctly leverage distributed resources. Stateless designs, asynchronous task handling, read replicas for databases, and caching avoid creating single points of contention. Applications may need refactoring to shard datasets and distribute work effectively.