Introduction

JavaScript generators are a powerful feature that allows creating lazy iterators – functions that can be paused and resumed to efficiently work with sequences or streams of data.

In this guide, we’ll learn the basics of generators and then explore practical use cases like streaming file data, implementing state machines, processing infinite sequences, and more.

By mastering generators, you can write more efficient and declarative JavaScript code to elegantly handle complex workflows and data streams. Let’s dive in!

JavaScript Generators Basics

A generator is a special function declared using the function* syntax:

    function* myGenerator() {
      // ...
    }

Calling it returns a Generator object instead of running the body. This object exposes methods to iterate through the generator:

    const genObj = myGenerator();

    genObj.next(); // Start execution
    genObj.next(); // Resume where it last paused

The key advantage is that the generator can yield at any point to pause execution and return a value:

    function* counter() {
      let count = 1;

      while (true) {
        yield count;
        count++;
      }
    }

    const gen = counter();

    gen.next(); // { value: 1, done: false }
    gen.next(); // { value: 2, done: false }

Each yield pauses the function execution and returns the yielded value. Execution resumes on the next next() call.

Unlike a normal function, which has a single entry and a single exit, a generator can be re-entered at each yield, with its local variables preserved between calls.
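The pause-and-resume behaviour can be made visible with a small trace. This is a minimal sketch; the names steps, trace and stepper are illustrative and not part of any API.

```javascript
// Records what the generator body has executed so far.
const trace = [];

function* steps() {
  trace.push('started');
  yield 'first';
  trace.push('resumed after first yield');
  yield 'second';
  trace.push('finished');
  return 'done';
}

const stepper = steps();               // nothing has run yet
const lengthBeforeNext = trace.length; // 0: the body starts only on the first next()

const r1 = stepper.next(); // { value: 'first', done: false }
const r2 = stepper.next(); // { value: 'second', done: false }
const r3 = stepper.next(); // { value: 'done', done: true }
const r4 = stepper.next(); // { value: undefined, done: true }
```

Note that the value passed to return appears once, on the result where done first becomes true; every later call reports undefined.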

Generators also underpin lazy iteration and asynchronous flow-control patterns, which we’ll explore in the sections that follow.

Consuming JavaScript Generators with for-of Loop

The easiest way to consume a generator is using a for-of loop:

    function* counter() {
      let i = 0;

      while (i < 5) {
        yield i++;
      }
    }

    for (let val of counter()) {
      console.log(val); // 0, 1, 2, 3, 4
    }

This repeatedly calls next() on the generator and loops until done is true. Each yielded value is assigned to the loop variable.

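Note that for-of is synchronous: it pulls values as fast as the generator can produce them. To iterate values that arrive asynchronously, declare the generator with async function* and consume it with for await...of.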
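For values that arrive over time, the language offers async generators consumed with for await...of. The following is a minimal sketch; sleep, ticker and collectTicks are hypothetical names chosen for the illustration.

```javascript
// Hypothetical helper: resolves after `ms` milliseconds.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// An async generator may await between yields.
async function* ticker(count, intervalMs) {
  for (let i = 0; i < count; i++) {
    await sleep(intervalMs);
    yield i;
  }
}

// for await...of waits for each next() promise before running the loop body.
async function collectTicks() {
  const seen = [];
  for await (const tick of ticker(3, 10)) {
    seen.push(tick);
  }
  return seen; // resolves to [0, 1, 2]
}
```

A plain for-of over ticker() would throw a TypeError, because an async generator object implements the async iteration protocol rather than the synchronous one.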

Generator Return Methods

In addition to next(), generators have return() and throw() methods:

    function* gen() {
      yield 1;
      yield 2;
      yield 3;
    }

    const g = gen();

    g.next(); // { value: 1, done: false }
    g.return('foo'); // { value: "foo", done: true }
    g.next(); // { value: undefined, done: true }

return() ends the generator early and produces { value, done: true } with the value passed in. Any further next() call returns { value: undefined, done: true }.
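One practical consequence of return() is that it triggers any finally block the generator is paused inside, which makes it a natural place for cleanup. A minimal sketch follows; withCleanup and the log arrays are illustrative names only.

```javascript
function* withCleanup(log) {
  try {
    yield 1;
    yield 2;
  } finally {
    // Runs when the consumer calls return() or leaves a for-of loop early.
    log.push('cleanup');
  }
}

const log = [];
const it = withCleanup(log);
it.next();                         // { value: 1, done: false }
const last = it.return('stopped'); // { value: 'stopped', done: true }
// log is now ['cleanup']

const log2 = [];
for (const value of withCleanup(log2)) {
  break; // for-of calls return() on the generator when the loop exits early
}
// log2 is now ['cleanup']
```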

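throw() raises an error inside the generator at the point where it is paused, so the generator’s own try/catch can handle it:

    function* gen() {
      try {
        yield 1;
        yield 2;
        yield 3;
      } catch (err) {
        console.log(err);
      }
    }

    const g = gen();

    g.next(); // { value: 1, done: false }, now paused inside the try block
    g.throw('Error!'); // Logs "Error!" and returns { value: undefined, done: true }

A generator object is both an iterator and an iterable. In runtimes that implement the ECMAScript iterator helpers (recent versions of Node.js and current browsers), it also inherits utility methods such as forEach(); elsewhere, use for-of or spread it into an array first: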

    function* gen() {
      yield 1;
      yield 2;
      yield 3;
    }

    const g = gen();

    g.forEach(x => console.log(x)); // Logs 1, 2, 3 (needs iterator helper support)

Generator Delegation

Generators can delegate to other generators using yield*:

    function* foo() {
      yield 1;
      yield 2;
    }

    function* bar() {
      yield 3;
      yield* foo();
      yield 4;
    }

    for (let v of bar()) {
      console.log(v);
      // 3, 1, 2, 4
    }

When yield* is given a generator (or any other iterable), it yields every value from it before continuing. This makes it useful for composing generators.
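Delegation is especially handy for recursive structures, where a generator delegates to itself. The sketch below walks a tree depth-first; the node shape ({ value, children }) and the name walk are assumptions made for the example.

```javascript
// Hypothetical node shape: { value, children?: [...] }
function* walk(node) {
  yield node.value;
  for (const child of node.children ?? []) {
    yield* walk(child); // delegate to the traversal of each subtree
  }
}

const tree = {
  value: 'root',
  children: [
    { value: 'a', children: [{ value: 'a1' }] },
    { value: 'b' },
  ],
};

const order = [...walk(tree)]; // ['root', 'a', 'a1', 'b']
```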

Practical Generator Examples

Now that we’ve covered the fundamentals of generators, let’s look at some practical use cases taking advantage of these capabilities:

1. Processing Large Datasets using Generator

Generators allow lazily operating on large datasets without loading everything into memory:

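    const fs = require('node:fs');

    function* processLargeFile(file) {
      const buffer = Buffer.alloc(1024); // 1kb
      const fd = fs.openSync(file, 'r');

      try {
        let bytesRead;
        // readSync returns 0 at end of file, which ends the loop
        while ((bytesRead = fs.readSync(fd, buffer, 0, buffer.length, null)) > 0) {
          yield buffer.subarray(0, bytesRead);
        }
      } finally {
        fs.closeSync(fd); // Also runs if the consumer stops early
      }
    }

    for (const chunk of processLargeFile('data.bin')) {
      // processData stands in for your own chunk handler
      processData(chunk);
    }

This incrementally yields file chunks for external processing instead of internally buffering gigabytes. Each chunk is a view over the same reusable buffer, so copy it if you need to keep it beyond the current iteration.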
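The same lazy approach extends to sequences that never end, since a consumer only pays for the items it pulls. Here is a minimal sketch of a pull-based pipeline; naturals, filter, map and take are hand-written helpers for illustration, not library functions.

```javascript
// Infinite source: never terminates on its own.
function* naturals() {
  let n = 1;
  while (true) yield n++;
}

function* filter(iterable, predicate) {
  for (const item of iterable) {
    if (predicate(item)) yield item;
  }
}

function* map(iterable, fn) {
  for (const item of iterable) yield fn(item);
}

// Stops pulling from the source once `limit` items have been produced.
function* take(iterable, limit) {
  if (limit <= 0) return;
  let taken = 0;
  for (const item of iterable) {
    yield item;
    if (++taken >= limit) return;
  }
}

const evens = filter(naturals(), (n) => n % 2 === 0);
const firstEvenSquares = [...take(map(evens, (n) => n * n), 3)]; // [4, 16, 36]
```

Only six numbers are ever generated here: nothing upstream runs until take asks for the next value, and returning from take closes the whole chain.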

2. Implementing Streaming APIs using Generators

Generators in JavaScript enable creating streaming APIs that deliver data progressively:

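    // writeHead, writeChunk, endResponse and send stand in for your own I/O helpers
    function createResponseStream(request) {
      async function* streamResponse() {
        yield writeHead(200);
        yield writeChunk('Hello ');

        // yield is not allowed inside a setTimeout callback, so await a timer instead
        await new Promise(resolve => setTimeout(resolve, 2000));

        yield writeChunk('World!');
        yield endResponse();
      }

      return streamResponse();
    }

    async function sendResponse(request) {
      for await (const chunk of createResponseStream(request)) {
        send(chunk);
      }
    }

Here an async generator yields each response chunk, so chunks can be sent as they become available rather than buffering the full response. The delay uses an awaited timer because yield is only valid directly inside the generator body, not inside a nested callback such as the one passed to setTimeout.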

Streaming generators are memory-efficient and minimize latency.

3. Queuing Asynchronous Tasks

Generators in JavaScript are handy for queueing tasks for asynchronous execution:

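    function* taskQueue() {
      const task1 = async () => { /* ... */ };
      const task2 = async () => { /* ... */ };

      yield task1(); // Start task 1 and hand its promise to the scheduler
      yield task2(); // Starts only once the scheduler resumes the generator

      return 'Done';
    }

    const queue = taskQueue();

    // Drain the queue, waiting for each task before starting the next
    async function scheduler() {
      let result = queue.next();

      while (!result.done) {
        await result.value; // Wait for the yielded promise to settle
        result = queue.next();
      }

      return result.value; // 'Done'
    }

    scheduler();

This runs the tasks strictly one after another without nested callbacks or hand-written promise chains. Note that queue.next() itself is synchronous; it is the await on result.value that makes the scheduler wait for each task before resuming the generator.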

4. Implementing Throttling

JavaScript Generators allow throttling concurrent operations to limit rate:

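    // tasks is an array of functions that each return a promise
    function* throttler(tasks, maxOps) {
      for (let i = 0; i < tasks.length; i += maxOps) {
        // Start at most maxOps tasks and yield their promises as one batch
        yield tasks.slice(i, i + maxOps).map(task => task());
      }
    }

    async function runThrottled(tasks, maxOps) {
      const results = [];

      for (const batch of throttler(tasks, maxOps)) {
        // The next batch is not started until this one has settled
        results.push(...await Promise.all(batch));
      }

      return results;
    }

    // op1, op2 and op3 are placeholder async functions
    runThrottled([op1, op2, op3], 2); // op3 starts only after op1 and op2 finish

A synchronous generator cannot block by itself, so here it only decides how much work to start: each yield hands out a batch of at most maxOps started tasks, and the driver awaits that batch before asking for the next one. This keeps no more than maxOps operations in flight at any time.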

5. Implementing Cooperative Multitasking

Generators enable cooperative “multitasking” by interleaving execution:

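    // someJob and someOtherJob are generator functions that yield between steps
    const gen1 = someJob();
    const gen2 = someOtherJob();

    function scheduler(tasks) {
      const queue = [...tasks];

      // Round-robin: advance one generator by a step, then switch to the next
      while (queue.length > 0) {
        const task = queue.shift();

        if (!task.next().done) {
          queue.push(task); // Not finished, so schedule it again
        }
      }
    }

    scheduler([gen1, gen2]);

This gives basic simulated multitasking by resuming a different generator on each iteration until all of them have finished. Libraries like co build on the same resume-from-outside mechanism to drive generators that yield promises.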
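To show how a scheduler can drive asynchronous work, here is a simplified sketch of a promise-aware runner in the spirit of co. It is not co’s actual implementation; run and double are hypothetical names used only for this example.

```javascript
// Drives a generator that yields promises, resuming it with each resolved value.
function run(generatorFn, ...args) {
  return new Promise((resolve, reject) => {
    const gen = generatorFn(...args);

    function step(method, input) {
      let result;
      try {
        result = gen[method](input); // method is 'next' or 'throw'
      } catch (err) {
        reject(err); // the generator threw and did not handle it
        return;
      }
      if (result.done) {
        resolve(result.value);
        return;
      }
      // Resume with the resolved value, or throw the rejection into the generator.
      Promise.resolve(result.value).then(
        (value) => step('next', value),
        (error) => step('throw', error)
      );
    }

    step('next', undefined);
  });
}

// Hypothetical async operation used only for the demo.
const double = (n) => Promise.resolve(n * 2);

const total = run(function* () {
  const a = yield double(2); // a === 4
  const b = yield double(a); // b === 8
  return a + b;              // the returned promise resolves to 12
});
```

This is essentially what async/await does natively: yield plays the role of await, and the runner plays the role of the engine.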

As we can see, generators act as an elegant async/lazy control structure with many applications from data streaming to concurrency throttling.

Using JavaScript Generators Today

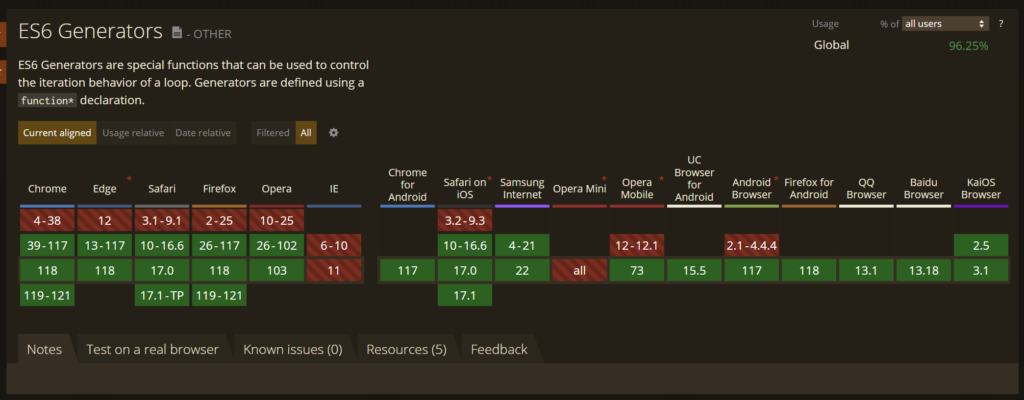

Generators have wide support in modern browsers and Node.js and require no transpiling.

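A note on utility libraries: Lodash functions such as _.takeWhile(), _.tap() and _.throttle() are not built on generators. They operate on arrays, chained wrappers and plain functions, so a generator’s output has to be collected into an array first (for example with the spread operator), which gives up laziness.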

So generators are readily usable today to build efficient asynchronous JavaScript programs.

Comparison with Callbacks, Promises and Async/Await

How do generators compare with other async patterns in JavaScript?

Callbacks are the simplest mechanism, but heavily nested callbacks (callback hell) make code difficult to reason about.

Promises sequence async actions linearly avoiding nesting. Chaining makes control flow easy to follow. But orchestrating parallel, complex flows requires effort.

Async/await provides the easiest imperative async code by making promises look synchronous. But it’s still fundamentally based on linear promise chains.

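Generators add lazy execution: the consumer decides when, and whether, each step runs, which suits non-linear workflows where steps are pulled on demand. On their own they are synchronous, so for async work they are paired with promises through a runner or written as async generators.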

So in summary:

- Callbacks work for simple async steps but handle complex flows badly

- Promises improve imperative sequencing but remain linear

- Async/await makes promises look sync but retains limitations

- Generators minimize sequencing constraints for more declarative async code

Each pattern has strengths for managing async logic based on tradeoffs.

Conclusion

JavaScript generators provide a powerful mechanism for flow control, data streaming, implementing concurrency primitives like throttling and queues, and modeling cooperative multitasking.

Key takeaways:

- Generators return iterable objects that can pause and resume execution

- yield returns a value and pauses the generator function

- for-of loops provide the canonical way to iterate generators

- Generator methods like throw() and return() handle errors and early termination

- yield* delegates to other generators enabling composition

- Generators enable streaming data, queueing tasks, throttling etc.

Generators lift constraints around linear, imperative control flow prevalent in callbacks, promises and async/await. The ability to pause and resume processing based on application logic opens up many capabilities.

JavaScript generators provide a declarative abstraction well-suited for today’s asynchronous applications dealing with event streams, user inputs and distributed data flows. I encourage you to try applying generators to make your async code simpler and more readable!

Frequently Asked Questions

How do generators help with memory management in JavaScript?

Generators can improve memory management in JavaScript by:

- Processing large datasets iteratively vs buffering into memory

- Streaming data as it becomes available rather than all at once

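- Pausing between values, so no further work is done or data produced until the consumer asks for more

- Letting the consumer throttle the workload, for example by pulling fewer items when memory is tight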

So generators allow lazier, more memory efficient asynchronous JavaScript code.

Can generators be used for synchronous code flows?

Yes, generators are equally useful for synchronous lazy generation of sequences or pull-based collection processing:

- Produce seated ticket combinations for a stadium

- Read CSV rows avoiding buffering full file

- Traverse nodes depth-first in DOM tree

- Build permutations or combinations generator

Anywhere data can be lazily produced or traversed, generators apply.
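As an illustration of the permutations case from the list above, here is a minimal sketch of a synchronous, lazy permutations generator. The function name and the sample input are chosen for the example.

```javascript
// Lazily yields every ordering of `items`, one array at a time.
function* permutations(items) {
  if (items.length <= 1) {
    yield [...items];
    return;
  }
  for (let i = 0; i < items.length; i++) {
    const rest = [...items.slice(0, i), ...items.slice(i + 1)];
    for (const tail of permutations(rest)) {
      yield [items[i], ...tail];
    }
  }
}

const firstTwo = [];
for (const p of permutations(['a', 'b', 'c'])) {
  firstTwo.push(p.join(''));
  if (firstTwo.length === 2) break; // the remaining orderings are never computed
}
// firstTwo is ['abc', 'acb']
```

Because the consumer stops after two results, the other four orderings are never built, which is the advantage over returning a complete array.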

What are some key generator libraries and abstractions in JavaScript?

Some useful generator libraries:

- Koa – Web framework whose 1.x releases used generator functions as middleware (2.x moved to async functions)

- Redux-Saga – Manage Redux side effects using generators

- David – Promisified generators with await support

- Bluebird – Promise library whose Promise.coroutine() runs generators as coroutines

- Puppeteer – Browser automation with a promise-based API, typically driven with async/await rather than generators

These provide higher-level abstractions, several of them leveraging generators under the hood.

How do Python coroutines and generators compare to JavaScript generators?

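Python generators are broadly similar, with yield pausing execution and next() resuming it. Key differences:

- Both are bidirectional: Python sends a value into a paused generator with send(value), JavaScript with next(value)

- Python also has native coroutines declared with async def and driven with await; the closest JavaScript equivalents are async functions and async generators

- Python generators raise StopIteration when exhausted, whereas JavaScript returns { value: undefined, done: true }

So the two are close in capability; the differences lie mainly in how values are sent in and how completion is signalled.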
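On the JavaScript side, two-way communication through next(value) can be sketched as follows. This is a minimal example; runningAverage is an illustrative name.

```javascript
// Each next(value) sends a number in and receives the running average back.
function* runningAverage() {
  let sum = 0;
  let count = 0;
  let average;
  while (true) {
    const value = yield average; // evaluates to the argument of the next next() call
    sum += value;
    count++;
    average = sum / count;
  }
}

const avg = runningAverage();
avg.next(); // priming call: runs up to the first yield; an argument here would be ignored

const first = avg.next(10).value;  // 10
const second = avg.next(20).value; // 15
const third = avg.next(30).value;  // 20
```

The priming call is needed because the first next() has no paused yield to deliver a value to.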

What are the disadvantages of using generators?

Some downsides of generators:

- More conceptual overhead compared to simpler patterns like callbacks

- Lose some static checking/tooling without concrete function signatures

- Debugging can be harder with multiple paused states

- Unbounded generators never finish, so spreading or collecting one into an array will exhaust memory

- Can make control flow harder to reason about

So use them judiciously, only where the complexity is justified, and don’t overapply them!